MCP: An Introduction to Agentic Op Support

1.1 Introduction

Agents and Large Language Models (LLMs) offer a powerful combination for driving automation. In this post, we’ll explore how to implement a straightforward agent that leverages the capabilities of LLMs alongside common tools to autonomously achieve a goal, showcasing the synergy between these technologies.

1.2 Fundamentals

1.2.1 What is an Agent?

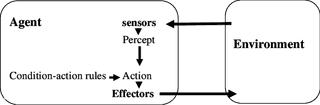

An agent is an AI-driven system that interacts with its environment to achieve a specific goal. It perceives, reasons, and acts autonomously, often using external tools to complete tasks step by step.

For visuals, here is a diagram from an application of multi-agent simulation of traffic behavior for evacuation during an earthquake disaster:

An agent is like a problem-solving model—whether an LLM or a more traditional AI system—that continuously observes, selects the best tool from its "toolbox," and executes actions in a loop to complete tasks. This aligns with the way modern AI agents, such as OpenAI’s DeepResearch, interact with users in a conversational or sequential manner. See also: Open-source DeepResearch – Freeing our search agents.

At its core, an agent consists of two (2) components, a “brain” and a “body”.

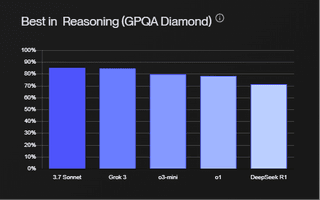

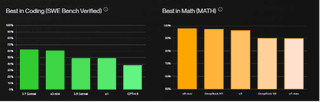

The brain (AI model) is responsible for reasoning, planning, and decision-making, so models with strong reasoning, such as Claude 3.7 Sonnet and Grok 3, are preferable. See the LLM Leaderboard for a comparison.

The body (tools and capabilities) is the set of actions and tools the agent can use to interact with its environment. Which model drives those tools best depends on the purpose; for code, for example, 3.7 Sonnet is currently doing well. However, the landscape is changing rapidly, and this may not be the best choice for very long; this is very much a snapshot in time.

By combining these elements, agents operate with autonomy and adaptability, making them valuable for tasks ranging from automation and security to research and decision support.
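To make the brain and body split concrete, here is a minimal sketch of the observe, select, act loop. Everything in it is hypothetical: the tools return canned data rather than touching a network, and `choose_action` is a hard-coded policy standing in for the LLM.

```python
from typing import Callable, Optional

# The "body": tools the agent can call. Both return canned data for illustration.
def lookup_dns(name: str) -> str:
    return "10.0.0.5" if name == "dc01.example.local" else "NXDOMAIN"

def ping(address: str) -> str:
    return "alive" if address == "10.0.0.5" else "unreachable"

TOOLS: dict[str, Callable[[str], str]] = {"lookup_dns": lookup_dns, "ping": ping}

Observation = tuple[str, str, str]  # (tool name, argument, result)

# The "brain": a fixed policy standing in for an LLM's reasoning.
def choose_action(goal: str, observations: list[Observation]) -> Optional[tuple[str, str]]:
    """Return (tool_name, argument), or None once there is nothing left to do."""
    if not observations:
        return ("lookup_dns", goal)
    last_tool, _, last_result = observations[-1]
    if last_tool == "lookup_dns" and last_result != "NXDOMAIN":
        return ("ping", last_result)
    return None

def run_agent(goal: str, max_steps: int = 5) -> list[Observation]:
    """Loop: ask the brain for an action, run the tool, record the observation."""
    observations: list[Observation] = []
    for _ in range(max_steps):
        action = choose_action(goal, observations)
        if action is None:
            break
        tool_name, argument = action
        result = TOOLS[tool_name](argument)
        observations.append((tool_name, argument, result))
    return observations

if __name__ == "__main__":
    for step in run_agent("dc01.example.local"):
        print(step)
```

The `max_steps` cap is a design choice worth keeping in a real agent too, since nothing else guarantees the loop terminates.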

1.2.2 What is an LLM?

The seminal paper "Improving Language Understanding by Generative Pre-Training" never actually uses the term "LLM," as this terminology emerged years later. Instead, it introduces what is now recognized as an early LLM by describing a transformer-based neural network that learns from unlabelled text through a two-step approach: unsupervised pre-training (predicting the next word in a sequence) followed by supervised fine-tuning on specific tasks. The authors refer to their creation as a "generative pre-trained transformer" (the origin of the GPT acronym), with the concept of "Large Language Models" as a distinct category only taking hold around 2020-2022, after significantly larger models like GPT-3 demonstrated remarkable capabilities across diverse tasks.

1.2.3 What is an Agent Tool?

An agent tool is a software utility that an agent uses to perform specific tasks, such as gathering intelligence, analyzing data, or executing security operations. These tools help agents interact with their environment and automate complex workflows. Tools can be anything from functions wrapping requests and API calls, to OS-level tools accessed with subprocess, or even a simple Python function that adds two (2) numbers together. We will look more into this when building the agent later in this post.
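As a rough illustration of how little a tool needs to be, the sketch below shows the two extremes mentioned above: a function that adds two numbers and a thin wrapper around subprocess. The names `add`, `run_command`, and `TOOLBOX` are made up for this example and are not part of any framework.

```python
import subprocess
import sys

def add(a: int, b: int) -> int:
    """Add two numbers together."""
    return a + b

def run_command(args: list[str], timeout: float = 10.0) -> str:
    """Run an OS-level command (no shell) and return stdout followed by stderr."""
    completed = subprocess.run(
        args, capture_output=True, text=True, timeout=timeout, check=False
    )
    return completed.stdout + completed.stderr

# A "toolbox" is just a mapping from a name the model can pick to a callable.
TOOLBOX = {"add": add, "run_command": run_command}

if __name__ == "__main__":
    print(TOOLBOX["add"](2, 3))
    # Use the current Python interpreter as a portable stand-in for a real CLI tool.
    print(TOOLBOX["run_command"]([sys.executable, "-c", "print('hello')"]))
```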

1.3 Agentic Frameworks

Several modern frameworks support the development of multi-agent systems, each with its own pros and cons. Whilst this isn’t a comparison blog, a few are worth noting. The table below lists a few common libraries with descriptions taken from their respective websites or repositories.

| Framework | Description |
| --- | --- |
| LlamaIndex | Build production agents that can find information, synthesize insights, generate reports, and take actions over the most complex enterprise data. |
| AutoGen | AutoGen is an open-source programming framework for building AI agents and facilitating cooperation among multiple agents to solve tasks. AutoGen aims to provide an easy-to-use and flexible framework for accelerating development and research on agentic AI. |
| CrewAI | Streamline workflows across industries with powerful AI agents. Build and deploy automated workflows using any LLM and cloud platform. |
| LangGraph | Gain control with LangGraph to design agents that reliably handle complex tasks. Build and scale agentic applications with the LangGraph Platform. |
| smolagents | This is a barebones library for agents, which write Python code to call tools and orchestrate other agents. |

However, for this blog, we will be looking at Model Context Protocol (MCP). Conveniently, at the time of writing this, Adam Chester provided a proof of concept using the exact setup I plan to talk about in this post. In that example, it’s used to synergise with Mythic C2 and automate Conti replication.

See here:

- https://x.com/_xpn_/status/1902848551463399504?t=KQ_Riy6RJ-d7ZphSl9UFWQ

- https://github.com/xpn/mythic_mcp

- https://www.youtube.com/watch?v=ZooTlwajQT4

With that said, we are going to look at building a simple agent that uses various tools such as ldapsearch, smbclient, nslookup, and ping to discover domain controllers on a network.

Another project worth investigating is nerve by evilsocket. The introductory post for the project is recommended reading: How to Write an Agent. The project uses YAML-based rules to orchestrate agents and is done very well. My personal favorite is webcam, an agent that checks an IP camera for pet activity.

1.4 The Agent: Building

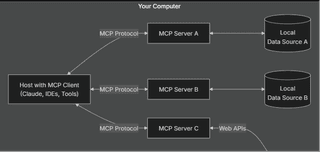

The mcp library makes this incredibly easy to define. However, it can get a little complicated architecturally, so it is recommended to review the documentation. MCP follows a client-server model where a single host can connect to multiple servers.

Ultimately, MCP is a standardized framework that is designed to streamline how applications supply context to LLMs.

To begin, a FastMCP instance is created, giving us access to the decorators that define the server's structure.

    from mcp.server.fastmcp import FastMCP

    mcp = FastMCP("network_mapper")

We have two (2) main decorators to consider:

- mcp.prompt()

- mcp.tool()

Below is how MCP defines the prompt and tool.

| Name | Description |
| --- | --- |
| Prompt | Prompts enable servers to define reusable prompt templates and workflows that clients can easily surface to users and LLMs. They provide a powerful way to standardize and share common LLM interactions. |
| Tool | Tools are a powerful primitive in the MCP that enable servers to expose executable functionality to clients. Through tools, LLMs can interact with external systems, perform computations, and take actions in the real world. |
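To give a feel for what a registration decorator does, here is a toy sketch that records a function's name, docstring, and parameter types when it is decorated. `ToyServer` is entirely hypothetical and is not how FastMCP is implemented; it only illustrates the idea that the metadata a client sees can be derived from the function signature and docstring.

```python
import inspect
from typing import Callable

class ToyServer:
    """Hypothetical stand-in for an MCP server, for illustration only."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.tools: dict[str, dict] = {}

    def tool(self) -> Callable:
        """Return a decorator that records the function's metadata."""
        def register(fn: Callable) -> Callable:
            signature = inspect.signature(fn)
            self.tools[fn.__name__] = {
                "description": inspect.getdoc(fn) or "",
                "parameters": {
                    name: getattr(param.annotation, "__name__", str(param.annotation))
                    for name, param in signature.parameters.items()
                },
                "handler": fn,
            }
            return fn  # the function itself is left unchanged
        return register

server = ToyServer("network_mapper")

@server.tool()
def run_ping(host: str, count: int = 1) -> str:
    """Ping a host and report whether it answered."""
    return f"would ping {host} {count} time(s)"  # placeholder body, no network access

if __name__ == "__main__":
    entry = server.tools["run_ping"]
    print(entry["description"])
    print(entry["parameters"])
```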

In this case, here is one (1) of the prompts:

    @mcp.prompt()
    def setup_prompt(subnet: str, objective: str) -> str:
        """
        setup network mapping by subnet and objective

        :param subnet: subnet to map
        :type subnet: str
        :param objective: the objective of the mapping
        :type objective: str
        :return: the prompt text with subnet and objective filled in
        :rtype: str
        """
        return f"""
        You are a senior penetration tester specialising in internal networks. Your primary objective is to map the network by scanning the subnet {subnet} and identify key infrastructure for Active Directory assets.

        You should think, carefully, step by step, in order to complete the objective with reasoning divided in Thought, Action, and Observation.

        Firstly, reflect on the objective, then if required, execute any tools you have access to on the target subnet {subnet} and report your findings.

        Your objective is to: {objective}
        """

The idea with this prompt is to use the ReAct pattern introduced in the paper ReAct: Synergizing Reasoning and Acting in Language Models. Essentially, it’s a reasoning paradigm that attempts to ground the LLM by forcing it to generate a thought, perform an action, and then reason about the resulting observation before deciding on the next step.

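To illustrate the Thought, Action, Observation structure, the sketch below parses a made-up model reply and appends a tool result to it. The reply text, the `Action: tool(argument)` line format, and both helper functions are assumptions for this example; with MCP and Claude Desktop the tool call is handled by the client rather than by parsing text like this.

```python
import re
from typing import Optional

# Hypothetical model reply following the Thought / Action layout.
REPLY = """Thought: I should find the domain controllers via DNS SRV records.
Action: run_ldap_dns_query(example.local)
"""

# Matches a line such as "Action: tool_name(argument)".
ACTION_PATTERN = re.compile(
    r"^Action:\s*(?P<tool>\w+)\((?P<argument>[^)]*)\)\s*$", re.MULTILINE
)

def parse_action(reply: str) -> Optional[tuple[str, str]]:
    """Return (tool_name, argument) from the first Action line, or None if absent."""
    match = ACTION_PATTERN.search(reply)
    if match is None:
        return None
    return match.group("tool"), match.group("argument").strip()

def append_observation(transcript: str, observation: str) -> str:
    """Feed the tool result back so the next Thought can reason over it."""
    return f"{transcript.rstrip()}\nObservation: {observation}\n"

if __name__ == "__main__":
    print(parse_action(REPLY))
    print(append_observation(REPLY, "dc01.example.local. 389"))
```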
Tools are just as easy:

    @mcp.tool()
    async def run_ldap_dns_query(host: str) -> str:
        """
        perform LDAP DNS query to identify domain controller.

        :param host: host to query
        :type host: str
        :return: LDAP DNS query results
        """
        # Query the SRV records that Active Directory publishes for its domain controllers
        command = f"dig -t SRV _ldap._tcp.dc._msdcs.{host}"
        return execute_os_command(command)

In this case, we use subprocess in Python to execute the command. What’s great about this logic is that the server only really cares about the function's input arguments and docstring, so the function itself can contain as much code as required to achieve the tool's goal. Another option is what we saw Chester do: wrap a C2 callback in this function, giving Claude access to the API.
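The `execute_os_command` helper is not shown in this post. A minimal sketch of what it might look like is below, assuming it takes the command as a single string and returns text; the timeout value and the error message formats are my own choices. Splitting the string with `shlex` and avoiding `shell=True` means shell metacharacters in `host` are not interpreted, although the input should still be validated since extra arguments could be smuggled in.

```python
import shlex
import subprocess

def execute_os_command(command: str, timeout: float = 30.0) -> str:
    """Run a command without a shell and return its output (or an error message) as text."""
    try:
        completed = subprocess.run(
            shlex.split(command),  # split into an argument list instead of using a shell
            capture_output=True,
            text=True,
            timeout=timeout,
            check=False,
        )
    except FileNotFoundError:
        return f"error: command not found: {command}"
    except subprocess.TimeoutExpired:
        return f"error: command timed out after {timeout} seconds"

    output = completed.stdout.strip()
    if completed.returncode != 0:
        # Return the failure as text so the LLM can see it and adjust its next step.
        return f"error (exit {completed.returncode}): {completed.stderr.strip() or output}"
    return output
```

Returning errors as strings rather than raising keeps the agent loop going, which suits the way the model retries and adjusts based on tool output.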

And that’s it for defining. Obviously, it can get more complex, but this is enough for today.

1.5 The Agent: Execution

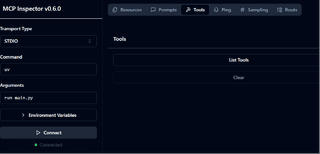

Using Claude Desktop, MCP servers can be easily configured. To debug an MCP server, modelcontextprotocol/inspector can be used to inspect the components of the server. Running the following command exposes the inspector UI at localhost:5173:

    npx @modelcontextprotocol/inspector uv run main.py

Note: alternatively, something like 5ire could also be used.

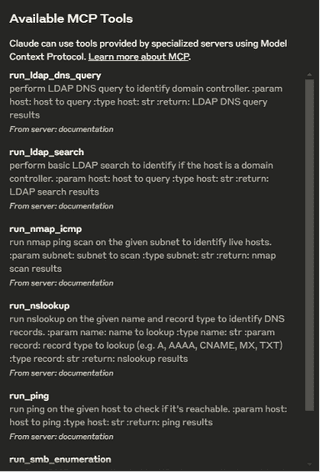

From within the debug UI, we have access to prompts, tools, etc.

Each tool is accessible and callable from here. When a host is passed into this tool, it simply fires it off, as seen below.

In Claude Desktop, clicking the hammer icon shows us the connected tools:

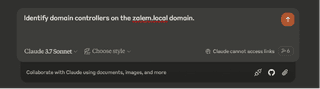

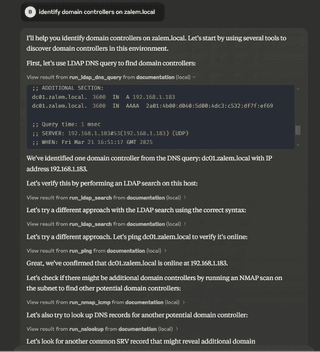
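For the tools to show up, the server has to be registered in Claude Desktop's `claude_desktop_config.json` under the `mcpServers` key. The sketch below builds such an entry in Python and prints it as JSON. The project path is a placeholder, and launching through `uv run` is an assumption carried over from the inspector command used earlier.

```python
import json

def build_server_entry(server_name: str, project_dir: str, script: str = "main.py") -> dict:
    """Build an mcpServers entry that starts the server with `uv run`."""
    return {
        "mcpServers": {
            server_name: {
                "command": "uv",
                "args": ["--directory", project_dir, "run", script],
            }
        }
    }

if __name__ == "__main__":
    # "/path/to/network_mapper" is a placeholder, not a path from this post.
    config = build_server_entry("network_mapper", "/path/to/network_mapper")
    print(json.dumps(config, indent=2))
```

The printed JSON can be merged into the existing config file; Claude Desktop needs a restart before it picks up a new server.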

To start, simply query Claude as normal:

As this executes, it will show the output of the tools as well as any adjustments it makes along the way. In the following image, Claude can be seen using LDAP DNS records to identify the domain controller. Then, it goes through a loop of pinging and additional DNS queries until it is happy with the result.

When it’s done, it provides a summary of how and why it did what it did!

That’s a simple implementation of a single agent. It can be expanded to do all sorts of things, such as password spraying or Kerberoasting. If a tool such as Impacket requires a Python virtual environment, that can be set up server-side and handled inside the tool logic, leaving the agent with a simple call to execute.

1.6 LlamaIndex: An Alternative

The process for LlamaIndex is very similar, but it gives us more control. In the snippet below, we specify Ollama as the LLM:

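    # Import paths assume the modular llama-index packages
    # (llama-index-core and llama-index-llms-ollama)
    from llama_index.core import PromptTemplate, Settings
    from llama_index.core.agent import ReActAgent
    from llama_index.llms.ollama import Ollama

    llm = Ollama(
        model="llama3.1:8b", base_url="http://192.168.1.218:11434", request_timeout=120.0
    )
    Settings.llm = llm

    agent_prompt = PromptTemplate(
        """…"""
    )

    # tools is the list of FunctionTool objects, e.g. the result of get_tools()
    agent = ReActAgent.from_tools(
        tools=tools,
        llm=llm,
        verbose=False,
        max_iterations=20,
        system_prompt=agent_prompt,
    )

Tools are defined the same way, just without the decorator: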

    from llama_index.core.tools import FunctionTool

    def run_ldap_dns_query(host: str) -> str:
        """
        perform LDAP DNS query to identify domain controller.

        :param host: host to query
        :type host: str
        :return: LDAP DNS query results
        """
        command = f"dig -t SRV _ldap._tcp.dc._msdcs.{host}"
        return execute_os_command(command)

    def get_tools():
        # Wrap each plain function so the agent can call it as a tool;
        # the other run_* functions follow the same shape as run_ldap_dns_query
        return [
            FunctionTool.from_defaults(fn=run_nmap_icmp),
            FunctionTool.from_defaults(fn=run_nslookup),
            FunctionTool.from_defaults(fn=run_ldap_dns_query),
            FunctionTool.from_defaults(fn=run_ping),
            FunctionTool.from_defaults(fn=run_smb_enumeration),
            FunctionTool.from_defaults(fn=run_ldap_search),
            FunctionTool.from_defaults(fn=run_password_spray),
            FunctionTool.from_defaults(fn=upload_test_file),
        ]

What makes this implementation more appealing is that an additional client isn’t required; the agent can simply be called:

    response = agent.chat(query)

1.7 Conclusion

The goal of this post was to create a simple agent using MCP and Claude. The next step up would be a multi-agent system that orchestrates several agents. For example, this blog built a network mapper, so the next agents could handle reporting or exploitation.