From RAGs to Riches: Using LLMs and RAGs to Enhance Your Ops

1.1 Introduction

In this blog, I will explore Retrieval-Augmented Generation (RAG) and how it can be applied to research capabilities. RAG is a framework that integrates retrieval-based models with generative AI to provide accurate and context-aware responses by storing and retrieving snippets of relevant information prior to prompting.

1.2 LLMs and RAG

Before jumping into the code and use cases, we must first define what LLM and RAG are. OpenAI defined Large Language Models (LLMs) in their 2018 paper Improving Language Understanding by Generative Pre-Training. LLMs are trained on huge datasets to predict the next word in a sequence, which enables them to generate coherent and contextually relevant responses.

Traditional LLMs rely solely on pretrained knowledge, which can lead to outdated or incomplete responses, especially in specialized domains. RAG addresses this limitation by integrating retrieval-based models with generative AI. This allows LLMs to access external knowledge sources, ensuring responses are more accurate and context aware. Unlike standard LLMs, which generate text purely from internal weights, RAG actively retrieves relevant information, keeping outputs grounded in up-to-date and domain-specific data. This approach was demonstrated in Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks, which explores RAG’s definition and its role in Natural Language Processing (NLP). Below is an example workflow of a RAG system:

1.3 RAG Use Cases

There is a plethora of use cases for these systems, and some are much better than others. For example, we attempted to use a RAG system to identify new methods of code execution by feeding it infosec blogs/papers and Microsoft documentation. However, it was never consistent in its responses and often made things up (as LLMs do). There is an element of fine-tuning here, but we want to explore some other offerings.

We are going to discuss the following use cases:

- Research assistant

- Research generation

- Target-specific tasking

Note, all prompts were tested with these three (3) models. This blog is not aimed at comparing the models; this is purely a callout of the ones used during testing.

For more information on uncensored models, see Uncensored Models, llama2-uncensored, and llama3-8b with uncensored GuruBot prompt.

A common use for ChatGPT is asking questions like:

"How do I implement bubble sort in Python?"

However, things get far more interesting when you start asking questions that require domain-specific knowledge.

For example, in Categorising DLL Exports with an LLM, I cloned MicrosoftDocs/sdk-api, which is provided in markdown format. This is perfect for RAG applications, as it eliminates the need for web crawling and allows us to directly incorporate structured documentation into our database.

With this data readily available, querying provides a much smoother experience than searching Google or MSDN manually. Anyone who has struggled to navigate MSDN’s documentation knows how frustrating that can be.

This highlights one (1) of the most widely accepted use cases of RAG: “talking to your documents.” This approach is especially relevant in infosec, aligning closely with the concept of a research assistant. Great examples of this are Cinnamon/kotaemon and weaviate/Verba, an open source, RAG-based tool designed for interacting with documents in a conversational way.

Consider a scenario where you have read a new blog like Secure Enclaves for Offensive Operations and want to query documents for further resources at scale whilst also concisely collating information. By ingesting the entirety of MSDN, along with transcripts from security talks and relevant blog posts, you could create a knowledge base that enables precise, contextual responses—far more efficient than manually sifting through scattered resources.

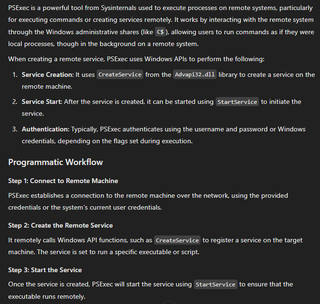

As an example, I asked llama3, deepseek-1, and ChatGPT GPT-4o how PSExec creates a service. Out of these, ChatGPT gave the most concise and technically accurate response.

The code generated by ChatGPT:

#include < windows.h >

#include < tchar.h >

#include < iostream >

int main() {

SC_HANDLE schService;

SC_HANDLE schSCManager;

TCHAR szServicePath[] = _T("C:\\path\\to\\your\\executable.exe");

// Open a handle to the Service Control Manager

schSCManager = OpenSCManager(

NULL, // Local machine

NULL, // ServicesActive database

SC_MANAGER_CONNECT | SC_MANAGER_CREATE_SERVICE // Access rights

);

if (schSCManager == NULL) {

std::cerr << "OpenSCManager failed. Error code: " << GetLastError() << std::endl;

return 1;

}

// Create a new service

schService = CreateService(

schSCManager, // SCM database

_T("MyRemoteService"), // Service name

_T("Remote Execution Service"), // Service display name

SERVICE_ALL_ACCESS, // Desired access

SERVICE_WIN32_OWN_PROCESS, // Service type

SERVICE_AUTO_START, // Start type

SERVICE_ERROR_NORMAL, // Error control

szServicePath, // Service binary path

NULL, // Load ordering group

NULL, // Tag ID

NULL, // Dependencies

NULL, // Account name

NULL // Password

);

if (schService == NULL) {

std::cerr << "CreateService failed. Error code: " << GetLastError() << std::endl;

CloseServiceHandle(schSCManager);

return 1;

}

// Start the service

if (!StartService(schService, 0, NULL)) {

std::cerr << "StartService failed. Error code: " << GetLastError() << std::endl;

CloseServiceHandle(schService);

CloseServiceHandle(schSCManager);

return 1;

}

std::cout << "Service started successfully!" << std::endl;

// Clean up

CloseServiceHandle(schService);

CloseServiceHandle(schSCManager);

return 0;

}And finally, a PSExec command:

psexec \\remote-computer -u username -p password -h -d "C:\path\to\your\executable.exe"The point of this demonstration is to show that LLMs have their own pros and cons. Typically, the Ollama LLMs performed quite poorly at answering research-based questions, but I did not pull the entire models—only the smaller, more feasibly sized ones.

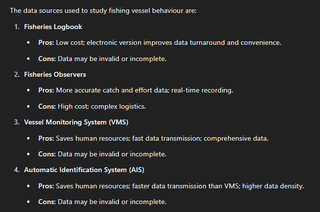

As most RAG applications focus on querying your documents, let’s try that. Here, we will give it Application of Artificial Intelligence in the Study of Fishing Vessel Behavior.

Table 1 in the paper lists four (4) data sources:

- Fisheries logbook

- Fisheries observers

- VMS

- AIS

I asked all three (3) models again the same question, and ChatGPT gave the most accurate and concise answer. Conciseness is important here, as deepseek tended to be the longest by far.

An additional use case, specifically for local LLMs, is being able to ingest information per engagement. For example, say you execute AtlasReaper to extract information from Jira and Confluence, or you hit a treasure trove of documentation on SharePoint or file shares.

In this case, you could start asking questions from the documents, like:

“How does client onboard new starters?”

Or:

“Which servers are responsible for SWIFT?”

It would be even simpler to use the python Wikipedia package and something like Firecrawl (more on this later) to create targeting passwords or subdomains.

1.4 Development

With all of that out the way, let’s look at building a RAG application with Python and langchain.

1.4.1 Data Collection and Processing

Once the purpose of the system is defined, the first thing to do is to hunt the ingestion sources. For example, if the input data is PDFs, then langchain has a function to directly handle that: PyPDFDirectoryLoader. If it’s markdown, then the function is UnstructuredMarkdownLoader. For a full list, including even social networking-based loaders, see document_loaders.

Markdown was the most prominent for me, as I was using Firecrawl, which can “Turn websites into LLM-ready data” and is very simple to put together. So, for example, I point it towards a blog, extract the blog as markdown, and feed it into the database with UnstructuredMarkdownLoader.

Here is an example script to download a blog to markdown:

from firecrawl import FirecrawlApp

def load_dotenv_file():

d = {}

with open(".env") as f:

for line in f:

key, value = line.strip().split("=")

d[key] = value

return d

def get_firecrawl_data(url):

dotenv = load_dotenv_file()

app = FirecrawlApp(api_key=dotenv["FIRECRAWL_API_KEY"])

response = app.scrape_url(url=url)

return response

for link in tqdm(blog_links, desc="Downloading blogs"):

try:

name = link.replace("https://trustedsec.com/blog/", "").replace("/", "")

filename = str(output_directory / f"{name}.md")

if Path(filename).exists():

continue

response = get_firecrawl_data(link)

markdown = response["markdown"].encode('utf-8', 'ignore').decode('utf-8')

with open(filename, "w", encoding="utf-8") as f:

f.write(markdown)

sleep(10)

except Exception as e:

print(f"Failed to download {link}: {e}")In Obsidian, Taming a Collective Consciousness,Sam discusses how targeted ops use Obsidian to share information. This is perfect for this scenario, as the entire knowledge we maintain can be ingested into a single application.

Once the information is gathered, we can now look at loading it up and splitting it into chunks so that we can match specific segments of the documentation. For this, we will use Chroma, as it’s simple and aimed at this kind of work. Firstly, we need to use the loader; in this case, I will just use the PyPDFLoader to load a single file:

loader = PyPDFLoader(args.paper)

data = loader.load()Next, we split the document up into chunks. This is done with RecursiveCharacterTextSplitter:

def split_documents(raw_documents):

text_splitter = RecursiveCharacterTextSplitter(

chunk_size=2000,

chunk_overlap=200,

length_function=len,

is_separator_regex=False,

)

chunks = text_splitter.split_documents(raw_documents)

return chunksNote, this has a lot of room for optimization. I would recommend playing around with it, but we will leave it as is for now.

Finally, when it’s chunked, we can store it in Chroma (note OllamaEmbeddings, which we will revisit shortly):

def add_to_chroma(chunks):

db = Chroma(persist_directory="chroma", embedding_function=OllamaEmbeddings(model="nomic-embed-text"))

existing_items = db.get(include=[])

existing_ids = set(existing_items["ids"])

new_chunks = [chunk for chunk in chunks if chunk.metadata["id"] not in existing_ids]

if new_chunks:

batch_size = 150 # Safely under the 166 limit

for i in tqdm(range(0, len(new_chunks), batch_size), desc="Processing batches"):

batch = new_chunks[i:i + batch_size]

batch_ids = [chunk.metadata["id"] for chunk in batch]

db.add_documents(batch, ids=batch_ids)This will create a “chroma” directory, like so:

Below, we are opening this up in DB Browser for SQLite, you can see the chunked text:

One (1) thing we quickly jumped over was the embedding function. Embedding functions convert text into vectors, which allows for efficient similarity searches. nomic-embed-text is an open source model by Nomic AI that offers high-performance text embeddings. Alternatively, OpenAI’s text-embedding-ada-002 is an option.

1.4.2 Querying the data

Accessing the data is quite straightforward with Chroma. Before doing anything else, we need to search for similarities. This can be done like so:

db = Chroma(persist_directory="chroma", embedding_function=OllamaEmbeddings(model="nomic-embed-text"))

results = db.similarity_search_with_score(query_text, k=k)Here, we define Chroma with the embedding and pass the query and our k value to similarity_search_with_score. Next, we want to structure the content. This code snippet is joining each chunk ad storing the similarity score:

unique_results = {}

for doc, score in results:

if doc.page_content not in unique_results:

unique_results[doc.page_content] = (doc, score)

context_text = "\n\n---\n\n".join([f"{doc.page_content}\n\nScore: {_score}" for doc, _score in unique_results.values()])Now, we are ready to build out our prompt. To do that, ChatPromptTemplate was used, which passes the context and the question to the prompt. Below is an example prompt using an Alpaca Method.

PROMPT_TEMPLATE = """

You are a academic professor and your task is to read papers and analyse its content.

### Instruction:

Analyse the content of the paper and provide answers from it based on the input. If the answer is not in the context provided, state that and do not attempt to answer. The reponse must be short and concise using only active language, prioritise sources with the highest score.

{context}

### Input:

{question}

### Response:

"""When this is all put together, the prompt looks something like this (snipped):

You are a academic professor and your task is to read papers and analyse its content.

### Instruction:

Analyse the content of the paper and provide answers from it based on the input. If the answer is not in the context provided, state that and do not attempt to answer. The reponse must be short and concise using only active language, prioritise sources with the highest score.

of fishing vessel behavior. In particular, with the help of AI algorithms to analyze and

mine AIS data, a series of achievements have been made in the fields of maritime traffic

safety, fishing vessel positioning, fishing ground research, fishing vessel classification,

fishing behavior identification and detection of ship anomalies [ 13]. It can be said that the

emergence of AIS has ushered in the digital era of marine governance, port and shipping

operations and management [14].

With the continuous development of remote sensing technology and geographic

information technology, the acquisition of fishing vessel behavior data is more accurate and

comprehensive. Although the data obtained by each method are not absolutely accurate

and comprehensive, the data are sufficient to provide researchers with a basis for research

on fishing vessel behavior. This paper summarizes and compares the advantages and

disadvantages of the above methods for obtaining fishing vessel behavior data, as shown

in Table 1.

Table 1. Comparison of advantages and disadvantages of various data sources that study fishing

vessel behavior.

Method Advantage Disadvantage

Fisheries Logbook

• The cost is relatively low.

• The electronic version can greatly reduce data

turnaround time and improve convenience.

• The data may be invalid.

• The data may be incomplete.

Fisheries Observers

• The catch and effort data collected were more accurate.

• Record the data at any time.

• The cost is relatively high.

• The logistics are complex.

VMS

• Save human resources.

• The speed of receiving and sending data is faster.

• The obtained data are more comprehensive.

• The data may be invalid.

• The data may be incomplete.

AIS

• Save human resources.

• The speed of receiving and sending data is faster.

• The obtained data are more comprehensive.

• Higher than the numerical density obtained by VMS.

• The data may be invalid.

• The data may be incomplete.

Score: 0.3190699627151529The final step is to send the prompt; in this instance, it’s just an Ollama LLM:

model = OllamaLLM(model=model)

response_text = model.invoke(prompt)

sources = [doc.metadata.get("id", None) for doc, _score in unique_results.values()]

sources = sorted([source for source in sources if source is not None])

console = Console()

response_markdown = Markdown(response_text)

response_panel = Panel(response_markdown, title="Response", border_style="green")

sources_panel = Panel("\n".join(f":page_facing_up: {source}" for source in sources), title="Sources", border_style="blue")

console.print(response_panel)

console.print(sources_panel)That’s it! Putting a bow on this is easy—you could use a custom application, utilize the ChatGPT UI, or just be generally creative.

1.5 Conclusion

A good RAG system is dependent on quite a few factors. Building something in-house gives you flexibility but will take a lot more time. Additionally, fine-tuning it over time will be the best course of action, as it takes some getting used to. It’s also worth mentioning that each LLM provided its own pros and cons, so depending on the objective, it may be worth flipping between each use case. However, it is common to use several LLMs to answer questions and then use another to QA that—Multi-Agent Systems.