PCI DSS Vulnerability Management: The Most Misunderstood Requirement – Part 2

Risk Ranking

This is part two (2) of a three (3)-part series on PCI DSS version 4.0 requirement 6.3.1, which covers the identification and management of vulnerabilities. This requirement is one (1) of the most misunderstood PCI DSS requirements and has a large impact on compliance programs because it is referenced in 10 other requirements.

Part one (1) covered the vulnerability identification process described in requirement 6.3.1. This part addresses the other half of requirement 6.3.1, related to risk ranking. Part three (3) will address other requirements that requirement 6.3.1 impacts.

It’s worth reviewing part one (1) if you are not sure what requirement 6.3.1 is about or are confused as to why it’s a topic worth exploring.

Risk Ranking Process

Once an organization has identified vulnerabilities in their commercial, open-source, bespoke, and custom software, they must determine how to address the vulnerabilities. PCI DSS requirement 6.3.1 requires using a risk ranking system to determine how to address these vulnerabilities but leaves it to each organization to define the specifics of their own risk ranking approach.

Most vulnerabilities with a Common Vulnerabilities and Exposures (CVE) number in the National Vulnerability Database (NVD)[1] will be assigned a Common Vulnerability Scoring System (CVSS) score. This scoring system indicates how severe a vulnerability is on a scale from 0 to 10 based on how difficult it is to exploit a vulnerability and the potential impact a vulnerability could have if exploited.

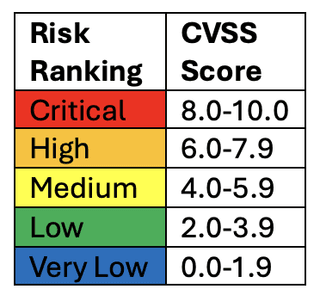

Some organizations try to base their entire risk ranking program on the CVSS score of a vulnerability, essentially assigning risk rankings to certain CVSS score ranges, for example:

While CVSS is a valuable tool, and this simple example ranking system could meet requirement 6.3.1 in certain circumstances, a major flaw in this approach is that some vulnerabilities aren’t assigned CVSS scores. This is often a problem for vulnerabilities that are not in the NVD and do not have assigned CVE numbers, e.g., vulnerabilities in bespoke or custom software[2]. Organizations using a CVSS-based ranking system will either need to calculate CVSS scores for unscored vulnerabilities or develop their own risk ranking criteria that are not based on CVSS scores. Both of these approaches are described below.
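The range-based approach can be sketched in a few lines of Python. This is a minimal sketch that assumes the qualitative severity bands from the FIRST CVSS v3.1 specification (Critical 9.0 to 10.0, High 7.0 to 8.9, Medium 4.0 to 6.9, Low 0.1 to 3.9, None 0.0); an organization's documented ranges may differ, and the function name `rank_from_cvss` is hypothetical. Note that an unscored vulnerability simply falls through without a rank, which is the gap that has to be filled by one of the approaches described in this post.

```python
from typing import Optional

# Lower bound of each band, highest first. Assumes the FIRST CVSS v3.1
# qualitative severity scale; an organization may document different ranges.
SEVERITY_BANDS = [(9.0, "Critical"), (7.0, "High"), (4.0, "Medium"), (0.1, "Low")]


def rank_from_cvss(score: Optional[float]) -> str:
    """Hypothetical helper: map a CVSS score to a risk rank by range."""
    if score is None:
        # No published CVSS score: the simple range lookup cannot rank it.
        return "Unranked (needs scoring)"
    if not 0.0 <= score <= 10.0:
        raise ValueError(f"CVSS scores range from 0.0 to 10.0, got {score}")
    for lower_bound, rank in SEVERITY_BANDS:
        if score >= lower_bound:
            return rank
    return "None"  # only a score of exactly 0.0 reaches this line


for score in (9.8, 7.5, 5.4, 2.1, None):
    print(score, rank_from_cvss(score))
```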

Another major drawback to a simple CVSS-based system is that it does not take the unique circumstances of each organization into account. Organizations may be frustrated when a simple CVSS-based approach to risk ranking, combined with the requirement 6.3.3 mandate to install patches for critical and high vulnerabilities within one (1) month, forces them to quickly apply a disruptive patch for a vulnerability that they feel is not very serious. This often comes up when the affected system component itself is not very critical, even if the vulnerability is, or when the vulnerability would be more difficult to exploit in a specific environment due to certain configuration parameters.

There are two (2) common solutions that can solve the issues with a simple CVSS-based ranking system, each covered in more detail below:

- Using the CVSS calculator to adjust CVSS scores based on optional metrics

- Creating custom risk ranking criteria not based on CVSS scores

Creating custom criteria is more flexible than creating or adjusting CVSS scores, as the organization can tailor a ranking system to its specific environment and needs. Using the optional CVSS metrics is a more rigid approach, but the calculator does more of the work of assigning a score, and the organization does not need to invest the effort of developing a custom system that is aligned with industry best practices.

Regardless of which method is used to assign risk rankings to vulnerabilities, the ranking scale must include 'high' and 'critical' levels at a minimum. Many organizations use a five (5) level ranking system with the following ranks:

- Critical

- High

- Medium (or Moderate)

- Low

- Very Low (or None)

Some organizations will omit the Very Low rank for a four (4) level system. Organizations are free to choose their own levels, but it is important to have Critical and High (or equivalents), as these are referenced in many PCI DSS requirements as described in part three (3).

Creating CVSS Scores

An organization using a CVSS-based approach can use the CVSS calculator to determine a CVSS score for vulnerabilities that have not been assigned a published score. TrustedSec recommends organizations use the same scoring system as the NVD, which is currently CVSS version 3.1. CVSS version 4.0 has been released and will be appropriate to use once the NVD transitions to the new version.

To calculate a CVSS score, organizations must complete the Base Score Metrics criteria and may optionally complete the Temporal Score Metrics. Information to complete these metrics should be derived from the available information about a vulnerability. The policy of the NVD is to assume the worst for any mandatory criteria (i.e., Base Score Metrics) that are not available when scoring a vulnerability, and organizations should adhere to this when creating their own scores.

The Base Score Metrics are the core criteria of any vulnerability and tend to stay constant throughout the lifecycle of a vulnerability. The criteria include:

- Where an attacker must be to exploit the vulnerability

- The complexity of the attack

- The privileges an attacker must have

- Whether user interaction is required to exploit the vulnerability

- Whether the vulnerability impacts resources in other components (scope)

- The potential level of confidentiality, integrity, and availability impact of the vulnerability
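To show how these criteria combine into a single number, the following is a minimal Python sketch of the CVSS v3.1 base score equations. The metric weights and the integer-based `roundup` function follow the FIRST CVSS v3.1 specification; the dictionary and function names are hypothetical, and in practice the official calculator does this work. The usage line scores the same metrics as the example vector string discussed later in this post.

```python
import math

# CVSS v3.1 base metric weights (FIRST specification). Names are hypothetical.
AV = {"N": 0.85, "A": 0.62, "L": 0.55, "P": 0.2}
AC = {"L": 0.77, "H": 0.44}
PR_UNCHANGED = {"N": 0.85, "L": 0.62, "H": 0.27}
PR_CHANGED = {"N": 0.85, "L": 0.68, "H": 0.5}
UI = {"N": 0.85, "R": 0.62}
CIA = {"H": 0.56, "L": 0.22, "N": 0.0}


def roundup(value: float) -> float:
    """Round up to one decimal place using the integer-based Roundup
    from the CVSS v3.1 specification, which avoids floating-point surprises."""
    int_input = round(value * 100000)
    if int_input % 10000 == 0:
        return int_input / 100000.0
    return (math.floor(int_input / 10000) + 1) / 10.0


def base_score(m: dict) -> float:
    """Compute a CVSS v3.1 base score from metric abbreviations."""
    changed = m["S"] == "C"
    # Impact sub-score combines the confidentiality, integrity, availability impacts.
    iss = 1 - (1 - CIA[m["C"]]) * (1 - CIA[m["I"]]) * (1 - CIA[m["A"]])
    if changed:
        impact = 7.52 * (iss - 0.029) - 3.25 * (iss - 0.02) ** 15
    else:
        impact = 6.42 * iss
    # Privileges Required is weighted differently when scope changes.
    pr = (PR_CHANGED if changed else PR_UNCHANGED)[m["PR"]]
    exploitability = 8.22 * AV[m["AV"]] * AC[m["AC"]] * pr * UI[m["UI"]]
    if impact <= 0:
        return 0.0
    if changed:
        return roundup(min(1.08 * (impact + exploitability), 10))
    return roundup(min(impact + exploitability, 10))


example = {"AV": "A", "AC": "H", "PR": "L", "UI": "N",
           "S": "U", "C": "H", "I": "L", "A": "N"}
print(base_score(example))  # 5.4
```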

The optional Temporal Score Metrics adjust the score based on criteria that tend to change over time. These include:

- The status of known exploits

- The status of remediation techniques

- Confidence in the report
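The Temporal Score Metrics act as simple multipliers on an already-calculated base score. This Python sketch assumes the CVSS v3.1 temporal weights from the FIRST specification, with "X" meaning Not Defined; `temporal_score` is a hypothetical helper name.

```python
import math

# CVSS v3.1 temporal weights (FIRST specification); "X" = Not Defined.
EXPLOIT_CODE_MATURITY = {"X": 1.0, "H": 1.0, "F": 0.97, "P": 0.94, "U": 0.91}
REMEDIATION_LEVEL = {"X": 1.0, "U": 1.0, "W": 0.97, "T": 0.96, "O": 0.95}
REPORT_CONFIDENCE = {"X": 1.0, "C": 1.0, "R": 0.96, "U": 0.92}


def roundup(value: float) -> float:
    """CVSS v3.1 Roundup: round up to one decimal place."""
    int_input = round(value * 100000)
    if int_input % 10000 == 0:
        return int_input / 100000.0
    return (math.floor(int_input / 10000) + 1) / 10.0


def temporal_score(base: float, e: str = "X", rl: str = "X", rc: str = "X") -> float:
    """Hypothetical helper: scale a base score by the Temporal Score Metrics."""
    return roundup(
        base
        * EXPLOIT_CODE_MATURITY[e]
        * REMEDIATION_LEVEL[rl]
        * REPORT_CONFIDENCE[rc]
    )


print(temporal_score(5.4, e="P", rl="W", rc="C"))  # 5.0
print(temporal_score(5.4, e="P", rl="O", rc="C"))  # 4.9
```

Because every temporal weight is at most 1.0, these metrics can only keep a score the same or lower it.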

Full information on each criterion is available in the FIRST CVSS specification documents for both CVSS 3.1 and CVSS 4.0.

Organizations should note the metrics used to assign a CVSS score to a vulnerability so the score can be easily recalculated later if the criteria change. The CVSS specification documents describe a standard format for recording the metrics, known as a CVSS vector string. For example, the vector string CVSS:3.1/AV:A/AC:H/PR:L/UI:N/S:U/C:H/I:L/A:N/E:P/RL:W/RC:C describes a vulnerability scored using CVSS version 3.1 with the following metrics:

- Attack Vector: Adjacent

- Attack Complexity: High

- Privileges Required: Low

- User Interaction: None

- Scope: Unchanged

- Confidentiality: High

- Integrity: Low

- Availability: None

- Exploit Code Maturity: Proof-of-Concept

- Remediation Level: Workaround

- Report Confidence: Confirmed

Readers can try this by entering these metrics into the CVSS version 3.1 calculator linked above; the result should be a base score of 5.4, a temporal score of 5.0, and an overall CVSS score of 5.0. The parameters can be adjusted to see how they affect the score. For example, if an official fix were released, the Remediation Level could be changed to Official Fix to reflect this, which drops the overall CVSS score to 4.9.
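Because the vector string captures every metric, recalculating a score after a change can be as simple as editing one field and re-entering the result. The following Python sketch (the helper names `parse_vector` and `set_metric` are hypothetical) parses a CVSS v3.x vector string and changes the Remediation Level from Workaround to Official Fix. It performs only minimal validation and is not a substitute for the official calculator.

```python
def parse_vector(vector: str) -> tuple[str, dict[str, str]]:
    """Hypothetical helper: split a CVSS v3.x vector into prefix and metrics."""
    prefix, *parts = vector.split("/")
    if not prefix.startswith("CVSS:3."):
        raise ValueError(f"not a CVSS v3.x vector: {vector}")
    metrics = {}
    for part in parts:
        name, value = part.split(":")
        metrics[name] = value
    return prefix, metrics


def set_metric(vector: str, name: str, value: str) -> str:
    """Hypothetical helper: return the vector with one metric changed or added."""
    prefix, metrics = parse_vector(vector)
    # Dicts keep insertion order, so existing metrics stay in place and a
    # newly added metric is appended to the end.
    metrics[name] = value
    return "/".join([prefix] + [f"{k}:{v}" for k, v in metrics.items()])


original = "CVSS:3.1/AV:A/AC:H/PR:L/UI:N/S:U/C:H/I:L/A:N/E:P/RL:W/RC:C"
print(set_metric(original, "RL", "O"))
# CVSS:3.1/AV:A/AC:H/PR:L/UI:N/S:U/C:H/I:L/A:N/E:P/RL:O/RC:C
```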

Environment Adjustments to CVSS Scores

CVSS is designed to allow organizations to adjust CVSS scores based on the details of their environment. This is done using the optional Environmental Score section of the CVSS calculator described above. I recommend that anyone not familiar with the CVSS calculator open it and try it out with a vulnerability like CVE-2024-20305 while reading this next part.

To start, an organization will need to fill in the CVSS calculator with the metrics that were originally used to assign a score. The vector string, described above, should be available for any vulnerability in the NVD that has been assigned a CVSS score and can be used to set the Base and Temporal (if available) metrics. Make sure to use the version of the CVSS calculator that aligns with the original score. The CVSS version 3.0 calculator is available from within the version 3.1 calculator, linked above, and a CVSS version 2.0 calculator is also available for older vulnerabilities.

The next step is to complete the Environmental Score Metrics based on the organization’s affected system components. Like the Base Score and Temporal Score metrics, the Environmental Score Metrics are described in the CVSS specification documents. As with any CVSS score calculated by an organization, be sure to note the CVSS vector string for the adjusted score so it can be re-created and adjusted further if necessary.

Most of the CVSS version 3.1 Environmental Score Metrics modify the Base Score Metrics. For example, if a vulnerability’s Attack Vector was originally set to Adjacent Network in the Base Score Metrics but the affected system component is not connected to any network, it may be appropriate to set the Modified Attack Vector to Local in the Environmental Score Metrics. This change overrides the corresponding Base Score Metric, and the CVSS score is updated to reflect it.

The Impact Subscore Modifiers are the only CVSS version 3.1 Environmental Score Metrics that are in addition to the Base Score Metrics. These reflect the confidentiality, integrity, and availability requirements of the affected system. For example, if a vulnerability’s Confidentiality Impact in the Base Score Metrics is High but the affected component contains information that does not require high confidentiality protection, the Confidentiality Requirement can be set to Low in the Environmental Score Metrics. In this case, the CVSS score will drop because a high impact on confidentiality matters less on a system with a low confidentiality requirement. Conversely, setting the Confidentiality Requirement to High would increase the CVSS score, as the information on the affected system is more sensitive to a loss of confidentiality through exploitation of the vulnerability.
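The environmental equations follow the same pattern as the base equations, with the Modified metrics substituted for the base metrics and the requirement weights applied inside the impact subscore. This Python sketch assumes the CVSS v3.1 environmental equations and weights from the FIRST specification and, to stay short, only handles vulnerabilities whose modified scope is unchanged; `environmental_score` is a hypothetical name. Starting from the example vector string used earlier, it applies the Modified Attack Vector and Confidentiality Requirement adjustments described above.

```python
import math

# CVSS v3.1 weights (FIRST specification); Modified metrics reuse base weights.
AV = {"N": 0.85, "A": 0.62, "L": 0.55, "P": 0.2}
AC = {"L": 0.77, "H": 0.44}
PR = {"N": 0.85, "L": 0.62, "H": 0.27}  # scope-unchanged values only
UI = {"N": 0.85, "R": 0.62}
CIA = {"H": 0.56, "L": 0.22, "N": 0.0}
REQUIREMENT = {"X": 1.0, "L": 0.5, "M": 1.0, "H": 1.5}
EXPLOIT_CODE_MATURITY = {"X": 1.0, "H": 1.0, "F": 0.97, "P": 0.94, "U": 0.91}
REMEDIATION_LEVEL = {"X": 1.0, "U": 1.0, "W": 0.97, "T": 0.96, "O": 0.95}
REPORT_CONFIDENCE = {"X": 1.0, "C": 1.0, "R": 0.96, "U": 0.92}


def roundup(value: float) -> float:
    """CVSS v3.1 Roundup: round up to one decimal place."""
    int_input = round(value * 100000)
    if int_input % 10000 == 0:
        return int_input / 100000.0
    return (math.floor(int_input / 10000) + 1) / 10.0


def environmental_score(m: dict) -> float:
    """Hypothetical helper: environmental score when modified scope is unchanged.

    A Modified metric (MAV, MAC, ...) that is omitted or "X" falls back
    to the corresponding base metric.
    """
    def mod(name: str) -> str:
        value = m.get("M" + name, "X")
        return m[name] if value == "X" else value

    if mod("S") != "U":
        raise NotImplementedError("this sketch only covers unchanged scope")
    cr, ir, ar = (REQUIREMENT[m.get(k, "X")] for k in ("CR", "IR", "AR"))
    # Requirement weights scale each impact; the subscore is capped at 0.915.
    miss = min(
        1 - (1 - cr * CIA[mod("C")]) * (1 - ir * CIA[mod("I")]) * (1 - ar * CIA[mod("A")]),
        0.915,
    )
    impact = 6.42 * miss
    exploitability = 8.22 * AV[mod("AV")] * AC[mod("AC")] * PR[mod("PR")] * UI[mod("UI")]
    if impact <= 0:
        return 0.0
    temporal = (EXPLOIT_CODE_MATURITY[m.get("E", "X")]
                * REMEDIATION_LEVEL[m.get("RL", "X")]
                * REPORT_CONFIDENCE[m.get("RC", "X")])
    return roundup(roundup(min(impact + exploitability, 10)) * temporal)


example = {"AV": "A", "AC": "H", "PR": "L", "UI": "N", "S": "U", "C": "H",
           "I": "L", "A": "N", "E": "P", "RL": "W", "RC": "C"}
print(environmental_score(example))                 # 5.0 (no adjustments)
print(environmental_score({**example, "MAV": "L"}))  # 4.9 (not network-connected)
print(environmental_score({**example, "CR": "L"}))   # 3.7 (low confidentiality need)
print(environmental_score({**example, "CR": "H"}))   # 6.3 (high confidentiality need)
```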

CVSS version 2.0 uses different Environmental Score Metrics that include:

- Potential for collateral damage

- Target distribution

- Modifiers for confidentiality, integrity, and availability

Custom Risk Ranking Criteria

Organizations that want more flexibility than is possible with CVSS scoring can create their own risk ranking system to meet requirement 6.3.1. An organization’s creativity is limited only by what they think a reasonable Qualified Security Assessor (QSA) or Internal Security Assessor (ISA) will approve during an assessment in light of the requirement that the ranking system be based on 'industry best practices'. As a QSA, I look for a process based on a known risk assessment methodology with enough detail and objectivity so that different individuals could arrive at approximately the same risk ranking given the same information and that the resulting ranking reflects the relative severity of vulnerabilities.

The system below is an example of how a fairly comprehensive ranking system that accounts for likelihood, impact, system criticality, and mitigating factors could be defined. It is not intended to be appropriate for any specific organization.

The example system works as follows:

- Assign initial risk rankings to vulnerabilities based on the likelihood of a vulnerability being exploited and the potential impact the exploit would have.

- Adjust the risk rankings to reflect the criticality of the potentially affected system components.

- Finalize the risk rankings by accounting for security controls and other mitigating factors at the organization that may interfere with exploitation, whether or not a vulnerability has a CVSS score.

Initial Risk Assessment

Likelihood and impact are the core criteria for any risk assessment. Likelihood reflects how likely an event is to occur in a given time period, or how frequently the event can be expected to occur in a time period. Impact reflects how much damage an event would cause if it occurred, regardless of how likely (or unlikely) it is to occur.

Combining likelihood and impact allows us to compare a variety of risks, with the most likely and impactful risks receiving the highest rankings and the least likely and impactful receiving the lowest. Most of the heavy lifting of a risk assessment system is done in the middle ranks, where rare risks with potentially enormous impact need to be compared against events that occur very frequently but have almost negligible impact.

Some factors relevant to likelihood include:

- Has proof-of-concept code or exploit code been published for the vulnerability?

- How difficult is it to exploit the vulnerability?

- Is the vulnerability known to be actively exploited by malware or other attackers?

- Can the vulnerability be exploited over a network, or must it run on the affected component?

- Are escalated privileges required to exploit the vulnerability?

- Is user interaction required to exploit the vulnerability?

Some factors relevant to impact include:

- Can the exploit provide initial access to a system?

- Can the exploit provide privileged access to a system?

- Can the exploit result in unauthorized release of data?

- Can the exploit result in unauthorized modification of data?
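One way to keep the answers to these questions objective, so that different people reach approximately the same result, is to turn them into points. The Python sketch below is purely hypothetical: the field names, the double weighting of active exploitation and privileged access, and the level thresholds are illustrative assumptions, not a recommended standard.

```python
from dataclasses import dataclass


@dataclass
class Vulnerability:
    # Likelihood factors (hypothetical field names for the questions above)
    exploit_code_published: bool
    low_complexity: bool
    actively_exploited: bool
    network_exploitable: bool
    no_privileges_required: bool
    no_user_interaction: bool
    # Impact factors
    initial_access: bool
    privileged_access: bool
    data_disclosure: bool
    data_modification: bool


def to_level(points: int, medium_at: int, high_at: int) -> str:
    """Convert a point total to Low/Medium/High using hypothetical thresholds."""
    if points >= high_at:
        return "High"
    if points >= medium_at:
        return "Medium"
    return "Low"


def likelihood(v: Vulnerability) -> str:
    # Hypothetical weighting: known active exploitation counts double.
    points = sum([
        v.exploit_code_published,
        v.low_complexity,
        2 * v.actively_exploited,
        v.network_exploitable,
        v.no_privileges_required,
        v.no_user_interaction,
    ])
    return to_level(points, medium_at=3, high_at=5)


def impact(v: Vulnerability) -> str:
    # Hypothetical weighting: privileged access counts double.
    points = sum([
        v.initial_access,
        2 * v.privileged_access,
        v.data_disclosure,
        v.data_modification,
    ])
    return to_level(points, medium_at=2, high_at=3)


example = Vulnerability(
    exploit_code_published=True, low_complexity=False, actively_exploited=False,
    network_exploitable=True, no_privileges_required=True, no_user_interaction=False,
    initial_access=True, privileged_access=True, data_disclosure=True,
    data_modification=False,
)
print(likelihood(example), impact(example))  # Medium High
```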

System Criticality

The criticality of systems will be unique to each organization: An organization that stores millions of cardholder data (CHD) records will have a very different profile from an organization that never stores CHD and only processes a few dozen transactions per day. Each organization should create a definition of criticality that places the system components that have the least ability to impact the security of account data at the lowest level and the system components that have the most ability to impact the security of account data at the highest level.

Some factors relevant to system criticality include whether system components:

- Store account data and how many records are stored

- Process or transmit unencrypted account data

- Have or manage the decryption keys for encrypted account data

- Provide security services for the cardholder data environment (CDE)

- Can otherwise connect to or affect the security of the CDE
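The criticality factors can be written down as explicit rules in the same way. This hypothetical Python sketch assumes a three-level scale and an arbitrary 100,000-record threshold; both are illustrative, and each organization would set its own tiers. It also assumes the component is already in scope, so a component that can only connect to or affect the CDE defaults to Low.

```python
def system_criticality(
    stores_account_data: bool,
    stored_records: int,
    handles_unencrypted_account_data: bool,
    manages_decryption_keys: bool,
    provides_cde_security_services: bool,
) -> str:
    """Hypothetical criticality tiers for an in-scope system component.

    The 100,000-record threshold and the tier boundaries are illustrative
    assumptions only.
    """
    if (
        (stores_account_data and stored_records >= 100_000)
        or handles_unencrypted_account_data
        or manages_decryption_keys
    ):
        return "High"
    if stores_account_data or provides_cde_security_services:
        return "Medium"
    # Remaining in-scope components can only connect to or affect the CDE.
    return "Low"


print(system_criticality(
    stores_account_data=False, stored_records=0,
    handles_unencrypted_account_data=True, manages_decryption_keys=False,
    provides_cde_security_services=False,
))  # High
```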

Mitigating Factors

Adjusting the risk rankings based on controls and other mitigating factors will account for how exploitation of the vulnerability may be affected by the unique details of the organization’s environment. For example, a vulnerability that requires user interaction to exploit would be much easier to exploit on a user’s workstation than on a server that is accessed only rarely by system administrators for maintenance purposes. Common considerations include:

- Have workarounds for the vulnerability been implemented on the affected component?

- What networks are the components accessible from?

- How many other components are on the same network as the affected component?

- Are users regularly accessing the affected component?

- Is there a cluster of similarly affected components?

- What network security controls are in place on the affected components?

- What endpoint security controls are in place on the affected components?
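Mitigating factors can be scored the same way, by counting the conditions that make exploitation harder in the organization's environment. In this hypothetical Python sketch, the parameter names and thresholds are illustrative assumptions; the example call describes a component like the one in the worked example below, which has Low mitigation.

```python
def mitigation_level(
    workaround_applied: bool,
    reachable_from_untrusted_networks: bool,
    shares_network_with_many_components: bool,
    regularly_accessed_by_users: bool,
    network_controls_block_exploit: bool,
    endpoint_controls_block_exploit: bool,
) -> str:
    """Hypothetical: count the conditions that make exploitation harder."""
    points = sum([
        workaround_applied,
        not reachable_from_untrusted_networks,
        not shares_network_with_many_components,
        not regularly_accessed_by_users,
        network_controls_block_exploit,
        endpoint_controls_block_exploit,
    ])
    # Illustrative thresholds; an organization would calibrate its own.
    if points >= 5:
        return "High"
    if points >= 3:
        return "Medium"
    return "Low"


print(mitigation_level(
    workaround_applied=False, reachable_from_untrusted_networks=True,
    shares_network_with_many_components=True, regularly_accessed_by_users=True,
    network_controls_block_exploit=False, endpoint_controls_block_exploit=True,
))  # Low
```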

Assigning Ranks

Matrices can be useful tools when assigning risk rankings based on the criteria above. Take for example a vulnerability that has been determined to have:

- Medium likelihood

- High impact

- High system criticality

- Low mitigation

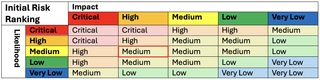

A matrix can be used to assign initial risk rankings based on likelihood and impact:

The initial risk of the vulnerability would be Medium, based on the intersection of Medium likelihood and High impact.

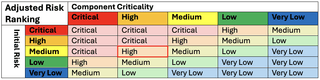

Another matrix can be used to adjust the risk rankings based on the criticality of the affected components:

Using this matrix, we would adjust our Medium risk vulnerability to High risk due to the high criticality of the system.

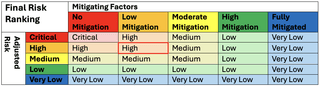

Taking this a step further, another matrix can be used to arrive at final risk rankings when taking security controls and other mitigating factors into consideration:

Our final risk ranking would be High due to the Low mitigation of this vulnerability.

These scales and matrices are suggestions, and organizations should change them to determine and weigh impact, likelihood, component criticality, and mitigating factors based on their own environment and risk tolerance.
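The matrices can also be encoded so that rankings are applied the same way every time. The matrices in the figures are not reproduced here; this Python sketch uses a hypothetical likelihood and impact matrix and expresses the criticality and mitigation matrices as rank shifts, a simplification chosen only so that it reproduces the worked example (Medium likelihood, High impact, High criticality, and Low mitigation result in High).

```python
RANKS = ["Very Low", "Low", "Medium", "High", "Critical"]

# Hypothetical likelihood x impact matrix (outer key: likelihood, inner: impact).
INITIAL = {
    "Low":    {"Low": "Very Low", "Medium": "Low",    "High": "Low"},
    "Medium": {"Low": "Low",      "Medium": "Medium", "High": "Medium"},
    "High":   {"Low": "Low",      "Medium": "Medium", "High": "High"},
}

# Hypothetical adjustment matrices, expressed as how many ranks to move.
CRITICALITY_SHIFT = {"Low": -1, "Medium": 0, "High": +1}
MITIGATION_SHIFT = {"Low": 0, "Medium": -1, "High": -2}


def shift(rank: str, steps: int) -> str:
    """Move a rank up or down the scale, clamped to Very Low and Critical."""
    index = RANKS.index(rank) + steps
    return RANKS[max(0, min(index, len(RANKS) - 1))]


def final_rank(likelihood: str, impact: str, criticality: str, mitigation: str) -> str:
    initial = INITIAL[likelihood][impact]                       # step 1
    adjusted = shift(initial, CRITICALITY_SHIFT[criticality])   # step 2
    return shift(adjusted, MITIGATION_SHIFT[mitigation])        # step 3


print(final_rank("Medium", "High", "High", "Low"))  # High
```

Encoding the matrices this way also makes it easy to show an assessor exactly how each ranking was derived.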

Documenting the Process

The criteria used to rank risks must be documented, regardless of whether CVSS scores or custom criteria are used. Create a procedure that describes how to complete the risk ranking process step by step, including any criteria or matrices used during the process. Also create a policy that requires the procedure to be followed for all newly identified vulnerabilities and assigns responsibility for completing the process to a specific person.

Care should be taken to name the industry best practices that the risk ranking methodology aligns with and to describe how the methodology aligns with those practices. CVSS is a well-known industry best practice; custom risk ranking methodologies need more supporting documentation.

The risk rankings that result when this process is carried out for a new vulnerability must also be documented as the PCI DSS testing procedures for requirement 6.3.1 call for the assessor to review this documentation.

[1] See part one (1) to learn what these are.

[2] PCI DSS defines Custom Software as “software developed by the entity for its own use” while Bespoke Software is “developed by a third-party on behalf of the entity and per the entity’s specifications.” Essentially, this covers any software that is not open source or bought off-the-shelf.