Measuring the Success of Your Adversary Simulations

Adversary Simulations (“AdSim” or “Red Teams”) represent a serious commitment on the part of an organization. In the United States, AdSim engagements are typically not required by industry standards in the private sector. Penetration tests are required for PCI compliance, but not Red Teams, which are often more elaborate offensive engagements and, let’s face it, more costly. In 2025, if a client comes to us and requests an AdSim engagement, we already know that they likely have several years of offensive testing under their belt and are truly interested in improving their security posture for its own sake, not the sake of a regulator. The years of having to continuously define Red Team vs pentest are essentially over. Clients know what they want and have a much better understanding of what they are ready for.

Chances are you, dear reader, are either a third-party provider of AdSim engagements (like TrustedSec), an organization that consumes those third-party services, or a member of an organization that both provides and consumes them, such as one with an internal Red Team. In all three cases, it is useful to know how to measure the overall success of the engagement, which costs a lot of time and money. As a consumer of other services myself, I want to know that every dollar spent, whether my own or my company’s, extracts the most value possible.

So how do we measure success? As with all difficult questions, a good starting place is in the definition of terms. In this case, success in AdSim engagements can be difficult to pin down. What does that truly mean? Is “success” determined by the number of findings? Perhaps the quality of the attack path? What about stealthiness, new detections written, objectives achieved, and so on? What about qualitative reasons such as the helpfulness and professionalism of the provider? The answer to all the above is yes and more. In its simplest form, success is when what you want to happen matches what actually did happen. If I attempt a shot from the free-throw line, I want the ball to go in. I expect the ball to go in. A successful shot is when it actually does.

Lastly, before we dive into some concrete ideas, we must define what we want from a Red Team engagement. Would it be sufficient to simply state that you, the client, want a PDF report at the end, and that constitutes success? Of course not. What if the report is trash and your experience was lousy? No, we want several things from a Red Team engagement, whether explicitly stated in the SOW, or implicitly desired and expected as part of a good-faith partnership. So, let’s break down a few of these by translating our wants into goals.

A good list of AdSim goals can include the following:

- Goal #1: Test Controls: To test and measure the effectiveness of the organization’s security controls, including response time, number of alerts triggered, as well as any additional security deficiencies related to the organization’s people, processes, and technology. This testing occurs in the context of an objective-based offensive Technical Security Assessment.

- Goal #2: Defensive Enlightenment: To achieve clarity on any gaps in security controls related to the organization’s ability to prevent, detect, and/or respond to the attacker’s actions in the AdSim.

- Goal #3: Professional Courtesy: To achieve all goals in a pleasant, professional, and timely manner with a skilled provider.

These are of course high-level goals, but I think that’s a reasonable summary of what all parties expect of a successful AdSim engagement. Beyond just a simple gut feeling at the end, how then can you tell if they are achieved? Let’s break down these goals into more useable targets and metrics.

1.1 Goal #1: Test Controls

At their core, Red Teams are about the calculated and skillful probing of an authorized target’s weaknesses. We want to see by what methods a skillful attacker can reach target objectives and evade security controls.

It is crucial for the target organization to have properly defined objectives prior to the start of the test that are then communicated to the tester. These objectives should be chosen with controls (or gaps) in mind. What this means is that if you cast too wide a net for a test objective (e.g., “just get domain admin”), it’s likely that a control that you want to be tested will end up being skipped inadvertently. For example, if you just made changes to your email security gateway, it is perfectly fine to tell the tester that an objective is to attempt to acquire code execution through phishing.

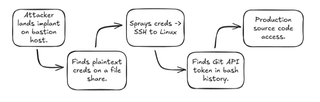

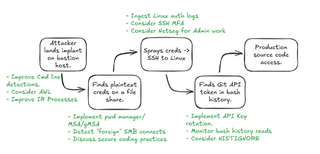

In a more specific example, say the organization just made changes to the EDR policy and introduced new authentication standards for its internal GitLab environment. A great test objective might be to see if the tester can acquire write-access to production source code. I have received this as an objective many times, and the attack path is always illuminating.

Assume the tester accomplishes the objective using the following attack path:

In most environments, a veritable anthology of preventative and detective controls will have been encountered and evaded (or not) during that single attack path. It is also possible (read: likely) that the organization will be presented with one or more control gaps that led to such an outcome.

Over the course of the test, a list of control deficiencies is documented, and evidence of detection should be provided to the tester for any of the steps along the attack path. This type of collaboration is vitally important and is an indicator of a successful engagement. From this information, several meaningful metrics can be determined, such as Mean Time To Detect (MTTD) and Mean Time To Respond (MTTR). Without two-way collaboration, this knowledge is often lost. It may very well be that the organization does not wish to share alert information with the tester. This is fine, but it typically betrays underlying insecurities, usually in the form of weakened trust between internal teams. I normally see this type of communication breakdown when one internal team is “testing” another without their knowledge (internal audit, for example). Yet I digress. If this information is not relayed to the third-party tester, then it should be retained for internal metrics.
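To make those metrics a little more tangible, here is a minimal Python sketch of how MTTD and MTTR might be derived from an attack-path timeline. The `AttackStep` record, its field names, the step names, and the timestamps are all hypothetical. It also assumes MTTD runs from the tester's action to the first related alert and MTTR from that alert to the first defender response; your organization may define these intervals differently.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass
class AttackStep:
    """One step on the attack path (hypothetical record layout)."""
    name: str
    executed_at: datetime                     # when the Red Team performed the action
    detected_at: Optional[datetime] = None    # first related alert, if any
    responded_at: Optional[datetime] = None   # first defender response, if any


def mean_delta(deltas: List[timedelta]) -> Optional[timedelta]:
    """Average a list of durations; returns None when the list is empty."""
    if not deltas:
        return None
    return sum(deltas, timedelta()) / len(deltas)


def mttd(steps: List[AttackStep]) -> Optional[timedelta]:
    """Mean time from action to first alert, over detected steps only."""
    return mean_delta(
        [s.detected_at - s.executed_at for s in steps if s.detected_at is not None]
    )


def mttr(steps: List[AttackStep]) -> Optional[timedelta]:
    """Mean time from first alert to first response, over responded steps only."""
    return mean_delta(
        [
            s.responded_at - s.detected_at
            for s in steps
            if s.detected_at is not None and s.responded_at is not None
        ]
    )


# Hypothetical timeline assembled from the tester's log and the defense team's alerts.
steps = [
    AttackStep("phishing payload executed",
               datetime(2025, 1, 6, 9, 0),
               datetime(2025, 1, 6, 9, 30),
               datetime(2025, 1, 6, 10, 30)),
    AttackStep("credential access",
               datetime(2025, 1, 6, 13, 0)),  # never detected
    AttackStep("source code write access",
               datetime(2025, 1, 7, 11, 0),
               datetime(2025, 1, 7, 12, 30),
               datetime(2025, 1, 7, 13, 0)),
]

undetected = [s.name for s in steps if s.detected_at is None]

print("MTTD:", mttd(steps))              # 1:00:00
print("MTTR:", mttr(steps))              # 0:45:00
print("Undetected steps:", undetected)   # ['credential access']
```

Note that undetected steps are excluded from the averages, which can flatter the numbers. Reporting them separately, as the last line does, keeps the picture honest.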

So, making this practical, here are some useful questions to help determine the success of the control test:

1) Preventative Control Validation

- Did the Red Team encounter the preventative controls you expected them to?

- Did the controls correctly stop the Red Team from achieving the technical objectives?

- Were any potential configuration changes to preventative solutions noted for follow up?

2) Detective Control Validation

- Were the correct alerts thrown, indicating preventative action had been taken?

- Was the Red Team detected along various parts of the attack path? Note that it is not sufficient to simply say: “Well, we would have caught you here and therefore the rest of the attack is invalid”. Ideally, there would be multiple detections along the entire kill chain.

- Were these detections accurate? In other words, was there a low rate of false negatives or positives?

3) Technical Acumen

- Did the Red Team provide sufficient technical expertise and effort to reach the target objectives?

- Were the payloads and techniques used sufficiently advanced to provide a comprehensive technical test of the organization’s capabilities, or was only “off the shelf” commodity tradecraft used?

- Was operational continuity ensured? Were there outages or egregious mistakes leading to a loss of CIA?
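The detective control questions above can be roughed out in numbers. The following Python sketch assumes the tester and the defense team have jointly reviewed the alerts and agreed which ones map to which Red Team action. The step names, alert IDs, and the `detection_summary` helper are hypothetical and purely for illustration.

```python
from typing import Dict, List, Optional, Tuple


def detection_summary(
    actions: List[str],
    alerts: List[Tuple[str, Optional[str]]],
) -> Dict[str, object]:
    """Summarize detections along an attack path.

    actions: ordered Red Team actions on the attack path.
    alerts:  (alert_id, matched_action) pairs; matched_action is None when
             the alert turned out to be unrelated to the tester's activity.
    """
    detected = {action for _, action in alerts if action is not None}
    missed = [a for a in actions if a not in detected]           # false negatives
    unrelated = [aid for aid, action in alerts if action is None]  # false positives

    return {
        "coverage": (len(actions) - len(missed)) / len(actions) if actions else 0.0,
        "missed_actions": missed,
        "false_positive_rate": len(unrelated) / len(alerts) if alerts else 0.0,
    }


# Hypothetical attack path and alert review results.
actions = [
    "initial-access",
    "privilege-escalation",
    "lateral-movement",
    "token-theft",
    "source-code-write",
]
alerts = [
    ("alert-001", "initial-access"),
    ("alert-002", None),                 # unrelated to the test
    ("alert-003", "lateral-movement"),
    ("alert-004", "lateral-movement"),   # second alert for the same action
]

summary = detection_summary(actions, alerts)
print(summary["coverage"])             # 0.4
print(summary["missed_actions"])       # ['privilege-escalation', 'token-theft', 'source-code-write']
print(summary["false_positive_rate"])  # 0.25
```

Listing the missed actions by name, rather than only reporting a percentage, shows where along the kill chain detections were absent.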

A word about technical objectives: AdSim assessments are usually objective based. If all the objectives are achieved, is the test a success? If none of the objectives were achieved, was it then a failure? This question is not as straightforward as it may seem. If the objectives were achieved without sufficient collaboration with the defense team, then I’d argue, no, the engagement was not a success. Likewise, if the Red Team threw everything they had at an organization and the defense team’s processes and controls were thoroughly tested and found to be up to the challenge, I wouldn’t call the test a failure. As in so many other areas of life, the truth rarely fits comfortably.

Objectives serve as important guardrails to keep the engagement’s value high by aiming for goals that are relevant to business and security. They are not the sole determinant of success but rather serve as the frame by which success can be defined and then measured for that organization. Imagine if I was handed a list of three technical objectives and achieved all of them in total radio silence. You didn't hear a word from me, but at the end of the test, I handed you a report with a comprehensive list of truly high-quality findings. Would you conclude the test was a success? Possible, but unlikely. You would likely feel “unfulfilled” somehow, and perhaps, dare I say, used. Why? For the same reason you would feel that way if a doctor listened to you, handed you a (correct) prescription, then ushered you out of the office without a word. It’s because it is insufficient to simply be handed a solution; you want to also understand the problem, bringing us to our next goal.

1.2 Goal #2: Defensive Enlightenment

Central to my thinking on AdSim success is a principle that extends beyond technical acumen, fancy tools, and raw detection metrics. These things are of course good and useful, but the idea of success has deep roots, and there are additional factors that I think can be used to further ripen its fruit.

There is a unique event that sometimes occurs in the back half of a TrustedSec AdSim engagement (which we call an Adversarial Attack Simulation). At some point, it is likely that a detection or alert will be thrown, and the defense will be read in to the occurrence of the test. This is when the magic starts. We will bring the defense team into a collaborative room, answer all their questions about payloads, tradecraft, and logistics, and then discuss meaningful ways to improve their detections even while we are still trying to achieve the engagement objectives. Light bulbs go on, and our role switches from adversary to teammate. I call this process defensive enlightenment, and it is my favorite part of the engagement. Well, that and shells. Love me some shellz.

Defensive enlightenment is simply the act of informing the defense team about the techniques used by the Red Team to achieve the objectives, with the goal of improving their knowledge and readiness for such attacks in the future. For more advanced orgs, it is quite likely that the defense team has some awareness of what is happening and usually has somewhere between 40% and 60% of the puzzle already pieced together. During defensive enlightenment, we fill in any knowledge gaps remaining and begin the process of discussing preventative and detective measures that can be implemented to further harden the environment.

If done properly, the defense team will walk away not only with a comprehensive report that will refresh their memory after the test is over, but also with a list of practical action items and research ideas that extend far beyond “findings”. I’ve often said that a good defense team can mine the narrative for gems even in the absence of written findings, and it’s true.

How can this particular goal be measured? A few questions here can help provide the necessary clarity. They should all be answered “yes”.

- Was the defense team aware of the engagement at any point before the final debrief call?

- Was the defense team allowed to ask free-form questions in more of an everyday team context, not just given a brief window to ask the operator after absorbing all the information from the report?

- Were ideas to improve the organization’s security exchanged that go beyond finding remediation? This is key and, if yes, is a strong indicator that genuine collaboration has occurred.

- Did the members of the defense team feel as though the Red Team partnered with them to improve? This is akin to a sparring partner actively working to improve the prize fighter and is the Red Team’s most fundamental job.

- Did the Red Team make themselves available outside of status calls/emails?

By the time the “official” technical debrief call occurs, the report information should have already been discussed so exhaustively that the call itself is almost unnecessary.

1.3 Goal #3: Professional Courtesy

The final measure of success is professional courtesy. This goes beyond simple were-they-polite-on-calls feelings and extends into some of the “unwritten rules” of behavior that Red Teamers should exhibit when dealing with sensitive environments, data, and people. It should go without saying that you don’t want to hire egotistical haxx0r rockstars who only care about one thing: themselves winning. A mature Red Team can successfully balance the competition (and yes, it is a competition) with the needs of the organization. Red Teamers do indeed want to win, and to a certain degree, the organization also wants them to win. In doing so, new deficiencies can be found and corrected. It’s a complicated emotion, however, because that means the deficiencies are there to begin with.

Yet, the overarching hallmark of a superior Red Team is their sincere and unwavering dedication to improving the security of those they are serving, even at the expense of their own ego. There is no place that this is better demonstrated than when failure occurs. We all know this instinctively, say when hiring someone to work on our homes. If things don’t go right, that’s OK, but admit the mistake and fix it so it does. It’s not how you fail; it’s how you recover.

With that in mind, aside from obvious behavioral standards such as obeying scope rules, here are a few more subtle considerations for an engagement’s success. Many of these are designed to flesh out hidden expectations that the organization may have but that can be difficult to communicate up front.

1) Availability

- Did the Red Team make themselves available on an ongoing basis during the engagement, ensuring prompt replies? At TrustedSec, we normally use out-of-band communications (e.g., Signal) for rapid client response if the operation is still in a clandestine phase.

2) Transparency

- Was the operator open about personal limitations, such as capabilities (“That is new technology to me”) or scheduling, and any potential value-limiting factors? Note here that I am using value-limiting as a two-way street. Engagement value could also be limited by the organization, through an overly restrictive scope. It is incumbent on the tester to make these concerns known, even if they may be unpopular opinions.

- Was the operator forthcoming with mistakes? This is simply crucial. Offensive testing is not a risk-free process. The minute it becomes one, it is something other than a technical offensive test. While rare, mistakes, exploits, and even basic scanning can sometimes cause outages. The operator should be fully transparent and forthcoming with all commands that may have had an effect.

3) Humility

- Was information presented in such a way that no one was belittled or disrespected? This can be subtle, but the problem can be rampant amongst Red Teamers and, more recently, defenders.

- Did you get a sense that the competition was healthy overall? As I said, AdSims most definitely are competitive, and they should be, for that delivers the most value. But there is healthy competition, which seeks to produce beneficial results for the organization, and unhealthy competition, which just seeks to win. Both sides can display this attitude, and it’s malignant in either case.

- Was education emphasized over exploitation? Were concepts explained properly and without in-your-face attitude? This is a major tell, and the answer should always be yes.

- Did the tester acknowledge defensive efforts positively or just downplay them in some kind of “cool story, but not good enough” manner?

4) Data Handling & Cleanup

- Did the tester go above and beyond to ensure that sensitive data elements were handled properly and deleted securely after the engagement?

- Did the tester actively work to clean up the environment before the testing window elapsed and/or provide instructions on how to do so if not possible during the testing period?

Sometimes, these things are only noticed in hindsight, after the engagement is over, but this list should help you determine overall success of the engagement when it comes to some of the less pronounced elements.

1.4 Conclusion

It can be difficult to determine if an AdSim engagement was a success. No single factor determines this; rather, a long list of seemingly inconspicuous events adds up to success or failure.

I’m including a checklist of the various topics discussed in this article. While it may be helpful for an at-a-glance view, it most certainly isn’t complete, and I welcome community feedback to help make it better.

The answer to each of these questions should be “yes”:

- Were anticipated controls encountered and thoroughly tested?

- Did the controls perform as expected, both detection and prevention?

- Were the MTTD and MTTR tracked (by yourself or the AdSim provider) and within acceptable or better range for your organization?

- Were detections varied along the attack path?

- Did the detections accurately represent the actions of the tester?

- Were there no false positives?

- Were new detections written or at least postulated during the testing process?

- Were objectives reached during the test? If not, was the testing process at least as comprehensive as possible?

- Do you feel the Red Team had sufficient technical skill to accomplish the objectives?

- Did the tester adapt to the situation using custom tradecraft (if needed)?

- Was operational continuity of the business ensured throughout the engagement?

- Was the defense team included in the testing process at any point?

- Was the defense team given the opportunity to engage with the tester to sufficient technical depth prior to the final debrief call?

- Were new defense ideas raised by the AdSim operators?

- Overall, would you say the AdSim provider operated as a partner rather than just as an adversary?

- Were they available continuously throughout the testing process in a variety of channels and escalation paths?

- Were they open about their own limitations?

- Were they open about any mistakes they may have made during the testing process?

- Was the overall attitude one of humility with excellence, or did the operator simply beclown themselves with their own ego?

- Did the operator emphasize education over exploitation?

- Were defensive efforts acknowledged both verbally as well as in the report?

- Did the operator go above and beyond to ensure the environment was clean after the testing process?

- Overall, did the tester properly address and manage your expectations as best as reasonably possible?
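If you want to track the checklist above across engagements, one simple approach is to record each answer and surface anything that was not a “yes”. The Python sketch below is only an illustration: the `review_checklist` helper is hypothetical, the questions are abbreviated from the list above, and the answers are made up.

```python
from typing import Dict, List, Optional


def review_checklist(answers: Dict[str, Optional[bool]]) -> Dict[str, List[str]]:
    """Group checklist questions by answer: yes, no, or not yet answered."""
    result: Dict[str, List[str]] = {"yes": [], "no": [], "unanswered": []}
    for question, answer in answers.items():
        if answer is None:
            result["unanswered"].append(question)
        elif answer:
            result["yes"].append(question)
        else:
            result["no"].append(question)
    return result


# Hypothetical answers for a handful of the checklist questions.
answers = {
    "Anticipated controls encountered and thoroughly tested?": True,
    "MTTD and MTTR tracked and within acceptable range?": False,
    "Defense team included in the testing process?": True,
    "Operator open about mistakes?": None,   # not yet discussed
    "Environment cleaned up after testing?": True,
}

review = review_checklist(answers)
print(f"{len(review['yes'])} of {len(answers)} answered yes")   # 3 of 5 answered yes
print("Follow up on:", review["no"] + review["unanswered"])
```

A raw count is less useful than the follow-up list. The items that were answered “no” or left blank are the ones worth raising with your provider.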

There’s a lot there, but hopefully this provides a deep and reflective insight into your recent AdSim engagement. If you made it this far, #1 – applause, and #2 – you should be commended for taking this seriously. AdSim exercises take a lot of time, money, and effort. Manage them well, and they can provide immense benefits to the overall security health of your organization.

If you're interested in a Red Team engagement, feel free to contact our team to get more information!