Behind the Code: Assessing Public Compile-Time Obfuscators for Enhanced OPSEC

Recently, I’ve seen an uptick in interest in compile-time obfuscation of native code through the use of LLVM. Many of the base primitives used to perform these obfuscation methods are themselves over a year old, and some of the initial research that I’m aware of is over a decade old at this point.

Given the prevalence of heuristics-based detections in a variety of AV engines, I wanted to take a basic pass at whether compile-time obfuscation of native code yields decreased or increased detection rates. I wanted to approach this from a naive point of view to see if large changes in detection rates could be observed with minimal input effort. This means that I will not be diving into how to interpret and understand the LLVM IR/MIR formats (intermediate formats that optimization passes are performed on) nor how to write your own obfuscator.

Throughout this post, we will also touch on creating an LLVM-based toolchain that compiles against the Microsoft SDK, similar to cl.exe. This includes compiling and statically linking against the C runtime using the /MT option instead of always depending on dynamically linking to msvcrt.dll.

If you want to skip ahead, links to the various test results are available in the appendix at the end of this post.

Environment Setup

The primary means of obfuscating native code that I am aware of is obfuscation passes introduced into LLVM. We will examine public passes and their effects on the detection rates of native executables.

First, I need to describe how the environment for these compilations is created so that you, as a reader, can recreate these experiments or potentially introduce compile-time obfuscation into your workflow.

Native Code Obfuscation Setup

To set up our obfuscation toolchain, we need to complete six (6) steps:

- Install LLVM/Clang from the system repository

- Use xwin to download and set up the Windows SDK

- Validate that we can produce a functional Windows binary

- Download and modify a set of public LLVM obfuscation passes

- Compile these passes and create a template makefile for future use

- Validate that we can produce an obfuscated, functional binary

For the purposes of this blog post, the environment will consist of a Fedora 39 machine using an LLVM 17-based toolchain with out-of-tree plugin passes. This toolchain will be configured to use the Visual Studio SDK and headers directly. Acquisition and layout of the Visual Studio environment is performed by xwin.

To install LLVM and the clang-cl front-end, open a command terminal on a Fedora-based Linux machine and execute:

dnf install clang clang-libs clang-resource-filesystem llvm llvm-libs llvm-devel

This will install LLVM and our clang-cl front-end, which will drive the compilation process. If you have not heard of clang-cl before, it is the MSVC (Microsoft Visual C++) compatible front-end for clang. When we combine this with the SDK and headers we will download using xwin, it gives us an environment that creates executables as though you had compiled them with Visual Studio on a Windows machine using clang. Note that the produced executable differs from one produced using cl.exe proper (e.g., the rich header is missing), but it will get us close enough and follows the format of what is now a drop-down option available in the foremost Windows native executable IDE.

With clang installed, we need to acquire and set up our Windows SDK. Copying the Windows SDK from a Visual Studio install and creating symlinks to deal with common differences such as file case sensitivity is possible but time consuming. Fortunately for us, xwin exists and will ease us through this process. Download the latest xwin release from GitHub. If you are using an x86-based Fedora Linux machine, you will want xwin-*-x86_64-unknown-linux-musl.tar.gz. Otherwise, you need to match the format for the host you will be running the xwin tool on.

After the download is complete, open a terminal in the directory of the extracted folder and execute the following commands to download the Microsoft SDK:

mv xwin-* /<final_home_partition>

cd <where you moved xwin to>

./xwin --arch x86,x86_64 --accept-license splat --output <final_home> --preserve-ms-arch-notation

In my case, <final_home> is /opt/winsdk.

Finally, we can test that we have created a working toolchain by making a basic message box program. The source code for this basic program, along with links to the rest of our code, is available here.

The message box code can be compiled against our clang setup using the following command:

clang-cl /winsdkdir /opt/winsdk/sdk /vctoolsdir /opt/winsdk/crt /MT -fuse-ld=lld-link test.cpp user32.lib

This executable can be transferred and tested on a Windows machine. As of this writing, the executable ran successfully on a Windows 10 system. With our working compiler, it is now possible to locate and configure some out-of-tree passes to use in our obfuscation testing.

For this experiment, the obfuscation passes will be a lightly modified fork of https://github.com/AimiP02/BronyaObfus. This repository was selected because the passes are already compatible with LLVM’s new pass manager. It is also relatively easy to split up the passes such that each can be fed individually into our compilation process. The modified fork used in the tests associated with this post is available here.

To compile the obfuscation passes, we will use cmake and a Unix makefile by running the following commands:

git clone https://github.com/freefirex/BronyaObfus

cd BronyaObfus

mkdir build

cd build

cmake ..

make

If you list the directory now, you should see a collection of .so files, namely libBogusControlFlow.so, libFlattening.so, libIndirectCall.so, libMBAObfuscation.so, and libStringObfuscation.so. With these shared object files, we can now introduce obfuscation passes to compilation.

Our last step is to validate that the passes we have just created can be used. First, let's create a template makefile that will assist with any future compilation work, as we’re about to have many different compilation flags. The template I created is available here. At its core, it will compile all the files in src and optionally add obfuscation passes if obf(bogus|flat|indirect|mba|str) are added to the make command line. General usage might look like:

make all obfbogus obfflat

This will make all the files under src, adding bogus control flow and flattening.

Test Setup

All code being tested for this experiment will be housed under a GitHub repository here.

The procedure is simple: create or find a code repository, compile it, and then upload the various iterations to VirusTotal to see how detection rates compare. This is not super scientific, and as the obfuscators have some degree of randomness in how they perform, it may not even be exactly repeatable. For my part, though, I feel that this will give a good idea of the relationship between LLVM obfuscation passes and anti-virus detection rates.

I’ve split my tests into two (2) primary passes under the names Goodware and Malware. The Goodware pass focuses on how an obfuscation pass affects the detection of known “good” software. The presence of detections in this category represents false positives, either with the original source or introduced due to the use of obfuscation passes.

The second category, Malware, includes known open-source tools used by bad actors. Here, we’re looking more closely at the change in detection rates from the original compilation of the source. One item to note is that simply changing the compiler from what is normally used (mingw -> clang-cl, msvc -> clang-cl, etc.) already affects the detection ratio.

In all of these tests, if N/A or failed is present in a category, that means either the obfuscation pass outright failed (i.e., caused a segfault while running) or caused errors later in the linking pass.

Reasoning for each tested program was as follows:

Goodware:

- MessageBox -> Exceedingly basic test to baseline from

- SHA-256 -> A legitimate but cryptographic operation

- AES-256 -> A legitimate but cryptographic operation

- PuTTY -> Well-known program that is capable of network communications

- Capstone -> Well-known library that would perform “odd” examination of machine code

Malware:

- Arsenal Kit (Cobalt Strike) -> Specifically was a test of the artifact kit portion, which in its base form should be well detected

- Mimikatz -> “We detect Mimikatz” is a phrase used by legitimate defenders

- Throwback -> One of the first C-based open-source implants I encountered

- TrevorC2 -> Another simple, open-source, C-based agent

- COFFLoader -> While not normally run standalone from disk, the technique used has been incorporated into multiple projects

Test Results

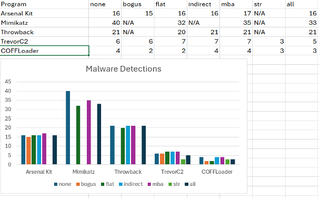

Below are the results of the tests performed. I was honestly surprised at the lack of meaningful changes in detection rates for both Goodware and Malware. The only case where a meaningful reduction occurred was with Mimikatz, where at most eight (8) detections were dropped. In most other cases, detections changed by no more than ±2. For the malware samples specifically, detections were normally reduced, although we can see an increase in detections for the TrevorC2 agent.

I find it notable that PuTTY went from a standard compilation having zero (0) detections to causing false positives when obfuscation was used. In most other Goodware samples, existing false positives were either increased or decreased seemingly randomly by the use of obfuscation.

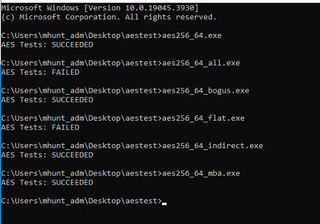

I also want to point out that the use of obfuscation passes caused the AES test suite to fail (image in appendix). I did not debug why these failures occurred, but it is a strong reminder that modifying code at compile time can introduce errors or conditions that would not normally be present. The possibility of such modifications should be strongly considered by teams looking to leverage this technology.

If you would like to see more information about the actual executables uploaded and discover if the detection rates of these samples have changed over time, I’ve included links to all of my VirusTotal uploads in the appendix.

Conclusion

I do not feel that adding LLVM obfuscation passes meaningfully impacts the detection ratio of native executables when considering disk scanning. It is entirely possible that, when attempting to avoid a known signature, LLVM obfuscation passes could be effectively deployed to modify the machine code in such a way that either disk or memory-based scans would be defeated. I’m now of the opinion that if you want or need to use a technique and you know there are specific detections in place, then modifying the bad code manually is largely effective. In a world where ML/heuristic detections are seemingly more prevalent than static signatures, I do not believe LLVM-based obfuscation can be effectively leveraged without putting in a large initial effort to develop custom passes that are not designed to simply bypass static detections but rather target the nature of heuristic detections. Given that most of those algorithms are proprietary, developing such a pass is likely more time-consuming than it is worth for most organizations.

Appendix:

VT Scan Links:

Messagebox_Only:

SHA-256:

PuTTY:

Capstone:

AES-256:

Arsenal Kit:

Mimikatz:

- none

- bogus - failed segfault

- str - failed

- indirect - failed

- mba

- flattening

- all

TrevorC2:

COFFLoader:

Throwback:

References:

https://github.com/B-Con/crypto-algorithms/tree/master

https://www.chiark.greenend.org.uk/~sgtatham/putty/latest.html

https://github.com/capstone-engine/capstone/archive/5.0.tar.gz

https://download.cobaltstrike.com/scripts

https://github.com/gentilkiwi/mimikatz

https://github.com/trustedsec/COFFLoader

https://github.com/trustedsec/trevorc2